Contents

- 1 Overview

- 2 Introduction

- 3 Choosing the right architecture for microservices on AWS

- 4 Containerization with Docker and Kubernetes

- 5 Implementing service discovery with AWS tools

- 6 Strategies for fault tolerance in microservices on AWS

- 7 Best practices for scalability and resilience in Java Microservices on AWS

- 8 Conclusion

Overview

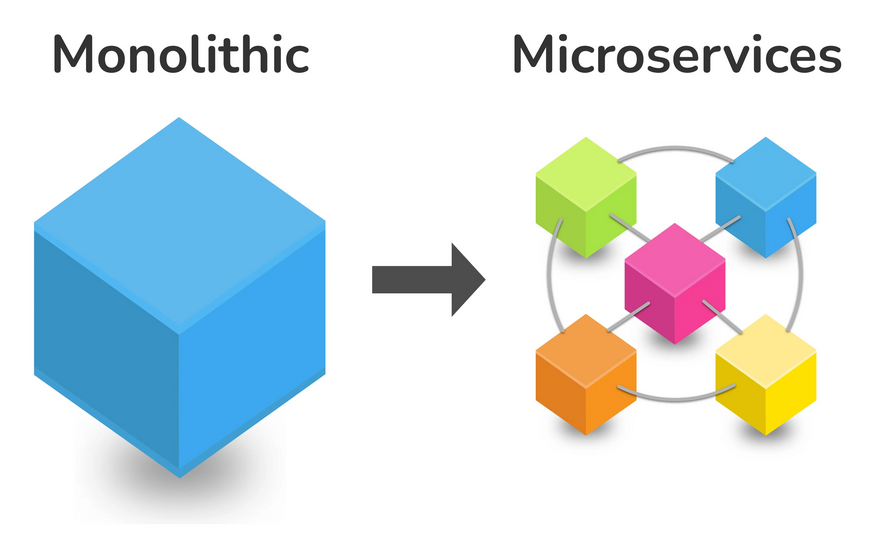

Java Microservices on AWS is a modern approach to building and deploying applications that is gaining popularity in the software development community. The approach involves breaking down a large application into small, independent services, each with its own functionality, and deploying them on a cloud infrastructure such as AWS. This allows for greater scalability and resilience as each microservice can be independently scaled and updated without affecting the entire application.

Scope of the article

- Choosing the right architecture for microservices on AWS

- Containerization with Docker and Kubernetes

- Implementing service discovery with AWS tools

- Strategies for fault tolerance in microservices on AWS

- Security considerations for microservices on AWS

- Best practices for scalability and resilience in Java Microservices on AWS

Introduction

In today's fast-paced software development landscape, businesses constantly look for ways to make their applications more efficient and effective. One approach that has gained popularity in recent years is running Java microservices on AWS. Java is a common choice for such services: it is an object-oriented programming language that supports inheritance, polymorphism, and abstract classes. A Java class can extend only one superclass, but it can implement multiple interfaces.

This approach involves breaking down a large application into smaller, independent services that can be deployed and managed on a cloud infrastructure such as Amazon Web Services (AWS). This allows for greater scalability and resilience, as each microservice can be independently scaled and updated without affecting the entire application.

However, building microservices on AWS can be a challenging endeavor, and developers must follow best practices to ensure scalability and resilience. In this article, we will discuss the best practices for building Java Microservices on AWS, including choosing the right architecture, using containerization, implementing service discovery, implementing fault tolerance, and ensuring security.

We start with choosing the right architecture for microservices on AWS.

Choosing the right architecture for microservices on AWS

Choosing the right architecture is critical when it comes to building microservices on AWS. There are several different architectural patterns that developers can choose from, depending on their specific use case and requirements.

One common pattern is the API Gateway pattern, which involves using a single entry point to the system and routing requests to the appropriate microservice. This approach can simplify the overall architecture and make it easier to manage the system as a whole. It can also help to improve security by limiting the number of entry points to the system.
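The routing step at the heart of this pattern can be sketched in a few lines of Java. This is only an illustration, not how Amazon API Gateway is configured: the class name `GatewayRouter`, the path prefixes, and the service names are all hypothetical, and a real gateway would also handle authentication, throttling, and request forwarding.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Toy router: maps a request path to the name of the microservice that should handle it. */
class GatewayRouter {
    // Path prefix -> service name. Both are illustrative values chosen by the caller.
    private final Map<String, String> routes = new HashMap<>();

    /** Registers a path prefix such as "/orders" for a service name. */
    GatewayRouter route(String pathPrefix, String serviceName) {
        routes.put(pathPrefix, serviceName);
        return this;
    }

    /** Returns the service for the longest matching prefix, or empty if nothing matches. */
    Optional<String> resolve(String path) {
        String best = null;
        for (String prefix : routes.keySet()) {
            // Match whole path segments only, so "/ordersX" does not match "/orders".
            boolean matches = path.equals(prefix) || path.startsWith(prefix + "/");
            if (matches && (best == null || prefix.length() > best.length())) {
                best = prefix;
            }
        }
        return Optional.ofNullable(best).map(routes::get);
    }

    public static void main(String[] args) {
        GatewayRouter router = new GatewayRouter()
                .route("/orders", "order-service")
                .route("/users", "user-service");
        System.out.println(router.resolve("/orders/42")); // Optional[order-service]
        System.out.println(router.resolve("/unknown"));   // Optional.empty
    }
}
```

Because every request passes through `resolve`, cross-cutting checks can be added in one place, which is the security benefit described above.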

Another pattern is the Service Mesh pattern, which involves adding an additional layer of infrastructure to the system that manages communication between microservices. This can help to improve scalability and resilience by providing a centralized location for managing traffic and implementing features like load balancing and service discovery.

A third pattern is the Event-Driven Architecture pattern, which involves using events to trigger actions across the system. This approach can be useful for systems that need to handle large volumes of data and respond to events in real-time.
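To make the idea concrete, here is a minimal in-process sketch of publish and subscribe. It is an assumption-laden toy: the `EventBus` class and the topic name are hypothetical, delivery is synchronous, and nothing is persisted. On AWS this role would normally be played by a managed service such as Amazon SNS, Amazon SQS, or Amazon EventBridge rather than by code like this.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Toy event bus: services subscribe to a topic and react when an event is published. */
class EventBus {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    /** Registers a handler that runs for every event published on the topic. */
    void subscribe(String topic, Consumer<String> handler) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    /** Delivers the payload to every subscriber of the topic; returns how many were notified. */
    int publish(String topic, String payload) {
        List<Consumer<String>> handlers =
                subscribers.getOrDefault(topic, Collections.emptyList());
        for (Consumer<String> handler : handlers) {
            handler.accept(payload);
        }
        return handlers.size();
    }

    public static void main(String[] args) {
        EventBus bus = new EventBus();
        // Two independent services react to the same event without knowing about each other.
        bus.subscribe("order.placed", id -> System.out.println("billing: charge order " + id));
        bus.subscribe("order.placed", id -> System.out.println("shipping: pack order " + id));
        bus.publish("order.placed", "42");
    }
}
```

The publisher does not know which services consume the event, so new consumers can be added without changing it.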

When choosing an architecture for microservices on AWS, it’s important to consider factors like scalability, resilience, security, and manageability. Developers should also consider how the architecture will affect the overall performance of the system and how easy it will be to debug and maintain.

Containerization with Docker and Kubernetes

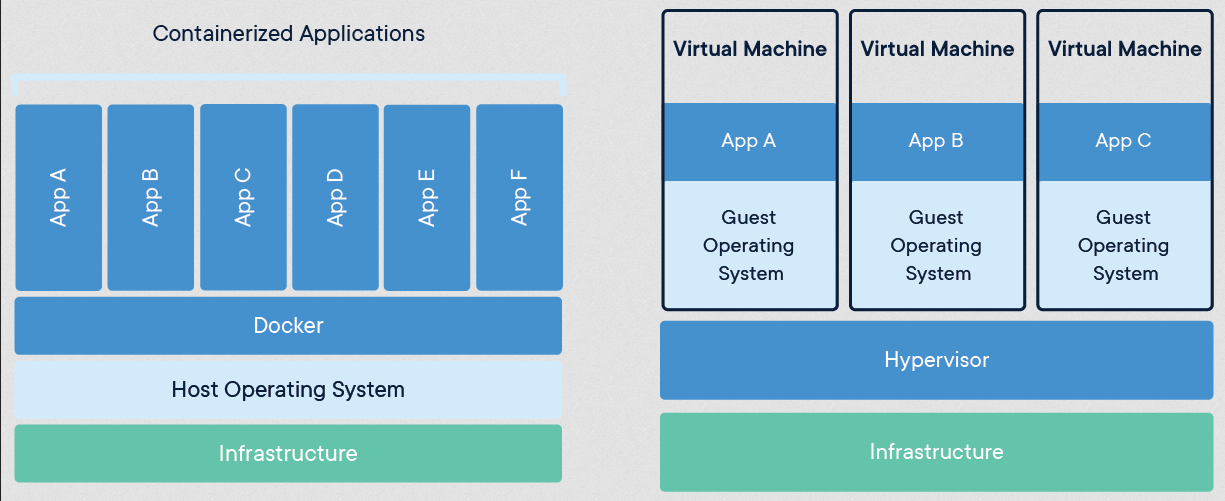

Containerization with Docker and Kubernetes has become a popular approach to building, deploying, and managing applications. Docker is a containerization platform that allows developers to package an application and its dependencies into a container, making it easy to deploy and run consistently across different environments. Kubernetes, on the other hand, is an open-source container orchestration platform that automates deployment, scaling, and management of containerized applications.

Containerization with Docker and Kubernetes provides several benefits for developers and IT operations teams. First, it allows for greater portability of applications, as containers can be easily moved between different environments without the need for significant changes. This makes it easy to develop and test applications locally before deploying them to production.

Second, containerization provides greater consistency in deployment, as the same container can be used across different environments, including development, testing, staging, and production. This helps to reduce the risk of configuration errors and ensures that the application runs as expected in each environment.

Third, containerization provides scalability and resilience, as containers can be easily scaled up or down to meet changes in demand. Kubernetes, in particular, provides powerful scaling capabilities, allowing developers to define rules for scaling based on resource usage, application performance, and other factors.
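As an illustration of a usage-based scaling rule, the Kubernetes Horizontal Pod Autoscaler documents its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below reproduces only that arithmetic in Java; the class and method names are hypothetical, and the real autoscaler also applies a tolerance band and stabilization windows that are left out here.

```java
/** Sketch of the replica calculation used for metric-based horizontal scaling. */
class ReplicaCalculator {

    /**
     * @param currentReplicas number of pods currently running
     * @param currentMetric   observed average metric, e.g. CPU utilization in percent
     * @param targetMetric    desired average metric; must be positive
     * @param minReplicas     lower bound on the result
     * @param maxReplicas     upper bound on the result
     */
    static int desiredReplicas(int currentReplicas, double currentMetric,
                               double targetMetric, int minReplicas, int maxReplicas) {
        if (targetMetric <= 0) {
            throw new IllegalArgumentException("targetMetric must be positive");
        }
        int desired = (int) Math.ceil(currentReplicas * (currentMetric / targetMetric));
        // Clamp to the configured bounds.
        return Math.max(minReplicas, Math.min(maxReplicas, desired));
    }

    public static void main(String[] args) {
        // 4 pods at 90% CPU with a 60% target: 4 * 1.5 = 6 pods.
        System.out.println(desiredReplicas(4, 90, 60, 2, 10)); // prints 6
    }
}
```

With four pods running at 90% CPU and a 60% target, the rule asks for six pods; if usage falls to 30%, it asks for two.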

Implementing service discovery with AWS tools

Service discovery is a critical aspect of building modern cloud-based applications. It is the process of automatically detecting and locating services in a distributed system. Service discovery makes it easier for applications to communicate with each other and ensure that all the components of the application are connected properly.

AWS provides several tools for implementing service discovery, including Amazon Route 53, AWS Cloud Map, and Elastic Load Balancing. Route 53 is a DNS service, so services can be located by name. AWS Cloud Map is a resource registry that clients can query through DNS or API calls. Elastic Load Balancing gives a group of service instances a single, stable endpoint.
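Whatever tool is used, the underlying mechanism is a registry that instances join and leave and that callers query by service name. The sketch below shows that mechanism in memory. It does not use the AWS SDK: `ServiceRegistry`, the service name, and the addresses are hypothetical, and a managed registry would also run health checks.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy service registry with round-robin lookup. */
class ServiceRegistry {
    private final Map<String, List<String>> instances = new HashMap<>();
    private final Map<String, Integer> cursors = new HashMap<>();

    /** Called by an instance when it starts. */
    synchronized void register(String service, String address) {
        instances.computeIfAbsent(service, s -> new ArrayList<>()).add(address);
    }

    /** Called when an instance stops or is found to be unhealthy. */
    synchronized void deregister(String service, String address) {
        List<String> list = instances.get(service);
        if (list != null) {
            list.remove(address);
        }
    }

    /** Returns the next registered address for the service, rotating through all of them. */
    synchronized String discover(String service) {
        List<String> list = instances.get(service);
        if (list == null || list.isEmpty()) {
            throw new IllegalStateException("no instances registered for " + service);
        }
        int cursor = cursors.getOrDefault(service, 0);
        cursors.put(service, cursor + 1);
        return list.get(Math.floorMod(cursor, list.size()));
    }

    public static void main(String[] args) {
        ServiceRegistry registry = new ServiceRegistry();
        registry.register("inventory", "10.0.0.1:8080");
        registry.register("inventory", "10.0.0.2:8080");
        System.out.println(registry.discover("inventory")); // 10.0.0.1:8080
        System.out.println(registry.discover("inventory")); // 10.0.0.2:8080
    }
}
```

Callers only need the logical name `inventory`; the addresses behind it can change as instances are added or removed.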

Strategies for fault tolerance in microservices on AWS

Java microservices are small, independent services that are designed to work together to build complex applications. When building microservices, fault tolerance is critical to ensure that the application remains available even when one or more services fail. Several AWS services and design patterns help implement fault tolerance in Java microservices, including:

- Elastic Load Balancing:

AWS Elastic Load Balancing (ELB) is a service that automatically distributes incoming traffic across multiple targets, such as EC2 instances, containers, and IP addresses. ELB offers several types of load balancers, including Application Load Balancers, Network Load Balancers, Gateway Load Balancers, and Classic Load Balancers.

By using ELB, you can distribute traffic across multiple instances of a microservice. If one instance fails, the load balancer can automatically route traffic to healthy instances, ensuring that the service remains available.

- Auto Scaling:

AWS Auto Scaling is a service that automatically scales your resources up or down based on demand. By using Auto Scaling, you can ensure that you always have the right number of instances of a microservice running to handle the current level of traffic.

Auto Scaling can also be used to replace failed instances automatically. When an instance fails, Auto Scaling can launch a new instance to replace it, ensuring that the service remains available.

- Amazon RDS Multi-AZ:

Amazon Relational Database Service (RDS) Multi-AZ provides high availability and fault tolerance for your database. Multi-AZ creates a secondary copy of your database in a different Availability Zone, ensuring that your database remains available even if the primary database fails.

By using RDS Multi-AZ, you can ensure that your microservices can continue to access the database even if one of the database instances fails.

- Circuit Breaker pattern:

The Circuit Breaker pattern is a design pattern that can be used to handle faults in microservices. The pattern works by wrapping calls to a microservice with a circuit breaker.

If the microservice fails, the circuit breaker trips and returns a predefined response instead of calling the microservice. This prevents cascading failures and allows the microservice to recover without affecting other services.

- Graceful degradation:

Graceful degradation is a technique that can be used to handle failures in microservices. With graceful degradation, the microservice degrades its functionality gracefully when a failure occurs, instead of completely failing.

For example, if a microservice that provides real-time stock prices fails, it can still provide the last-known stock price, rather than returning an error. This ensures that the application remains available, even when a microservice fails.
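The last two strategies combine naturally: a circuit breaker decides when to stop calling a failing service, and the fallback it returns is the degraded response. The following is a minimal sketch under stated assumptions, not a production implementation. The `CircuitBreaker` class, its threshold and cool-down parameters, and the injected clock are all hypothetical; in practice a library such as Resilience4j is commonly used instead.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Minimal circuit breaker: opens after N consecutive failures, retries after a cool-down. */
class CircuitBreaker<T> {
    private final int failureThreshold;
    private final long openMillis;
    private final LongSupplier clock; // injected so the cool-down can be tested without sleeping
    private int consecutiveFailures = 0;
    private boolean open = false;
    private long openedAt = 0;

    CircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    /** Runs remoteCall unless the circuit is open; on failure or open circuit, returns the fallback. */
    synchronized T call(Supplier<T> remoteCall, Supplier<T> fallback) {
        if (open) {
            if (clock.getAsLong() - openedAt < openMillis) {
                return fallback.get(); // still open: skip the remote call entirely
            }
            open = false; // cool-down elapsed: let one trial call through
        }
        try {
            T result = remoteCall.get();
            consecutiveFailures = 0; // success closes the circuit
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (consecutiveFailures >= failureThreshold) {
                open = true;
                openedAt = clock.getAsLong();
            }
            return fallback.get(); // degrade gracefully instead of propagating the error
        }
    }

    synchronized boolean isOpen() {
        return open;
    }

    public static void main(String[] args) {
        CircuitBreaker<Double> breaker =
                new CircuitBreaker<>(2, 5_000, System::currentTimeMillis);
        double lastKnownPrice = 101.5; // hypothetical cached stock price
        Supplier<Double> priceService = () -> {
            throw new RuntimeException("price service unavailable");
        };
        for (int i = 0; i < 3; i++) {
            System.out.println(breaker.call(priceService, () -> lastKnownPrice));
        }
        System.out.println("circuit open: " + breaker.isOpen());
    }
}
```

In the demo the price service always fails, so every call returns the last-known price, and after the second failure the breaker stops calling the service until the cool-down has passed.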

Best practices for scalability and resilience in Java Microservices on AWS

Java microservices are small, independent services that together make up a larger application, and each one can be scaled on its own. When building microservices on AWS, it's important to follow best practices for scalability and resilience to ensure that your application can handle increased traffic and stay available even when one or more services fail.

Here are some best practices to follow when building Java microservices on AWS:

- Use Auto Scaling:

AWS Auto Scaling is a service that can automatically scale your resources up or down based on demand. By using Auto Scaling, you can ensure that you always have the right number of instances of a microservice running to handle the current level of traffic.

Auto Scaling can also be used to replace failed instances automatically. When an instance fails, Auto Scaling can launch a new instance to replace it, ensuring that the service remains available.

- Use a distributed cache:

A distributed cache can help improve the performance and scalability of microservices by reducing the load on the database. By caching frequently accessed data in memory, you can reduce the number of database queries and improve the response time of your microservices.

AWS provides several caching services, such as Amazon ElastiCache, which is a fully managed in-memory data store that can be used to cache data from popular databases, such as Amazon RDS and Amazon DynamoDB.

- Use a container orchestration service:

AWS provides several container orchestration services, such as Amazon ECS and Amazon EKS, that can be used to manage and deploy containerized microservices at scale. By using a container orchestration service, you can easily deploy and manage multiple instances of a microservice across multiple Availability Zones, ensuring high availability and fault tolerance.

- Implement a circuit breaker pattern:

The Circuit Breaker pattern is a design pattern that can be used to handle faults in microservices. The pattern works by wrapping calls to a microservice with a circuit breaker. If the microservice fails, the circuit breaker trips and returns a predefined response instead of calling the microservice. This prevents cascading failures and allows the microservice to recover without affecting other services.

- Use a load balancer:

AWS Elastic Load Balancing (ELB) is a service that can automatically distribute incoming traffic across multiple instances of a microservice. By using ELB, you can ensure that your microservices can handle increased traffic without becoming overloaded.

- Use a monitoring and logging solution:

AWS provides several monitoring and logging services that can be used to observe your microservices. Amazon CloudWatch collects metrics and logs and can raise alarms on them, while AWS CloudTrail records API activity in your account for auditing. By using these services, you can identify and troubleshoot issues before they become critical, ensuring high availability and resilience.
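Of the practices above, the distributed cache is the one that most directly changes application code. A common way to use it is the cache-aside pattern: look in the cache first, load from the database only on a miss, and remove the entry when the underlying data changes. The sketch below shows the pattern with an in-process map standing in for the cache. The `CacheAside` class and the loader function are hypothetical; with Amazon ElastiCache the map would be replaced by a Redis or Memcached client, and entries would usually carry an expiry time.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Cache-aside sketch: an in-memory map stands in for a distributed cache. */
class CacheAside<K, V> {
    private final Map<K, V> cache = new ConcurrentHashMap<>();
    private final Function<K, V> loader; // e.g. a database query; supplied by the caller

    CacheAside(Function<K, V> loader) {
        this.loader = loader;
    }

    /** Returns the cached value, loading and storing it on a miss. */
    V get(K key) {
        return cache.computeIfAbsent(key, loader);
    }

    /** Drops the entry so the next read reloads it; call this after updating the source data. */
    void invalidate(K key) {
        cache.remove(key);
    }

    public static void main(String[] args) {
        CacheAside<String, String> products = new CacheAside<>(id -> {
            System.out.println("loading product " + id + " from the database");
            return "product-" + id;
        });
        System.out.println(products.get("42")); // miss: runs the loader
        System.out.println(products.get("42")); // hit: served from the cache
    }
}
```

Only the first read of a key reaches the database; later reads are served from memory until the entry is invalidated.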

Conclusion

- In conclusion, building Java microservices on AWS requires following best practices for scalability and resilience so that your application can handle increased traffic and stay available even when one or more services fail. These best practices include using Auto Scaling, a distributed cache, a container orchestration service, the circuit breaker pattern, a load balancer, and a monitoring and logging solution.

- By implementing these best practices, you can meet the demands of your users and provide a reliable user experience. They also help you identify and troubleshoot issues before they become critical, so that your application remains highly available and resilient over time.
